Angjoo Kanazawa

Electrical Engineering and Computer Sciences

Angjoo Kanazawa is an Assistant Professor in the Department of Electrical Engineering and Computer Sciences at UC Berkeley. She earned her BA in Mathematics and Computer Science from New York University and her PhD in Computer Science at the University of Maryland, College Park.

Spark Award Project

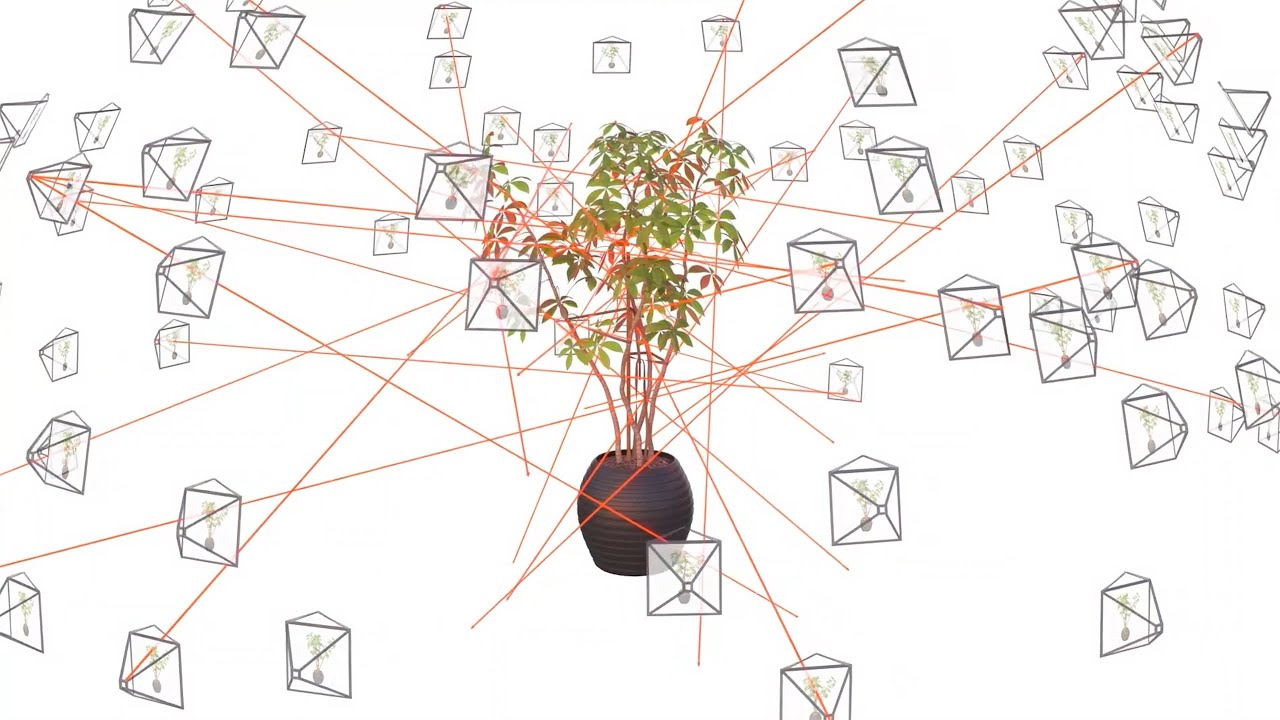

Kanazawa and her team plan to develop a comprehensive AI framework for practical, photo-realistic capture and rendering of arbitrary scenes based on volumetric neural representations. Unlike conventional computer graphics or structure-from-motion pipelines, which describe scenes with meshes and textures, their framework will recover the geometry and appearance of scenes using the latest volumetric neural rendering techniques.

Angjoo Kanazawa’s Story

The popular adage that one picture is worth a thousand words is widely attributed to Frederick Barnard, writing in 1921 about the effectiveness of images for conveying information. That 1000:1 ratio is poised to grow dramatically this century as we enter a new era of “immersive photography,” in which you can quickly convert photos and videos from your smartphone into 360-degree 3D scenes that you, your family, and your friends can explore.

Bakar Fellow Angjoo Kanazawa notes that “since the dawn of civilization, a universal pursuit of humanity has been to capture the reality that surrounds us.” She cites reality-capturing imagery as having played critical roles in human history, from crude paintings on cave walls, to the development of photography in the mid-1800s, to the invention of movies in the late 19th century, to the introduction of virtual and augmented reality (VR and AR) in the 20th century. With her 2022 Bakar Fellows Spark Award, Kanazawa intends to add a new milestone to this pursuit, one that is especially user-friendly.

Kanazawa, an Assistant Professor in the Department of Electrical Engineering and Computer Sciences, defines immersive photography as “reality-capturing systems that enable immersive photo-realistic experiences.” Such systems provide photo-realistic 3D scenes that users can explore with “6 Degrees of Freedom,” meaning they can look around in 360 degrees and move in any direction. Current 3D rendering technologies have limitations that the immersive photography system Kanazawa envisions is designed to overcome.

“Existing photogrammetry techniques focus on recovering 3D geometry and thus result in partial reconstructions that are neither immersive nor robust,” says Kanazawa. “Our approach focuses on high-quality appearance and immersive reconstructions. Users will be able to capture and render their full surroundings, indoors and outdoors, thereby providing a new and better way to preserve their reality.”

Q: What is the difference between immersive photography and VR/AR systems?

A: A VR or AR system is the medium through which a user can experience immersive content. Immersive photography generates the content users can experience on VR/AR systems.

Q: You have said that your new immersive photography system will have immediate applications in the gaming and film industries, which currently rely on photogrammetry to create 3D effects. What improvements will you offer?

A: Photogrammetry uses meshes and textures to render 3D scenes, but these techniques can only be applied to certain objects, demand time-consuming professional image capture of staged or static scenes, and frequently require post-processing to correct errors. We’re developing a comprehensive AI framework for practical, photo-realistic capture and rendering of arbitrary scenes with dynamic appearance, including highly complex objects and effects such as glass, water and fog. Our goal is for consumers to be able to capture and view immersive photos using a cell phone or camera with no additional special equipment.

Q: What is the key to achieving this goal?

A: We’ve developed a technology we call Plenoxels, short for plenoptic voxels. Whereas a pixel is a 2D picture element and a voxel is a 3D volume element, a Plenoxel is a volume element whose color changes depending on the angle from which it is viewed. Plenoxels evolved from an earlier 3D rendering technology, also developed at UC Berkeley, called NeRF, short for Neural Radiance Fields.
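To make the idea of a view-dependent volume element more concrete, here is a minimal sketch of one way to store it: each voxel keeps a set of spherical-harmonic coefficients per color channel, and the color seen from a given direction is obtained by evaluating those harmonics in that direction. The degree-2 basis, the sign convention for the constants, and the sigmoid that squashes the result into a valid color range are assumptions chosen for illustration, and `sh_basis` and `voxel_color` are hypothetical names, not code from the Plenoxels project.

```python
import numpy as np

# Real spherical-harmonic constants for degrees 0-2 (one common convention).
C0 = 0.28209479177387814
C1 = 0.4886025119029199
C2 = (1.0925484305920792, -1.0925484305920792, 0.31539156525252005,
      -1.0925484305920792, 0.5462742152960396)


def sh_basis(d):
    """Evaluate the 9 real SH basis functions at a unit direction d = (x, y, z)."""
    x, y, z = d
    return np.array([
        C0,                                   # degree 0: same in every direction
        -C1 * y, C1 * z, -C1 * x,             # degree 1
        C2[0] * x * y,                        # degree 2
        C2[1] * y * z,
        C2[2] * (2 * z * z - x * x - y * y),
        C2[3] * x * z,
        C2[4] * (x * x - y * y),
    ])


def voxel_color(sh_coeffs, view_dir):
    """Return the RGB color of one voxel as seen from view_dir.

    sh_coeffs: array of shape (3, 9), one row of SH coefficients per channel.
    The sigmoid mapping into (0, 1) is an illustrative assumption.
    """
    d = np.asarray(view_dir, dtype=float)
    d = d / np.linalg.norm(d)             # the basis expects a unit vector
    raw = sh_coeffs @ sh_basis(d)         # shape (3,): one value per channel
    return 1.0 / (1.0 + np.exp(-raw))
```

In this sketch, a voxel whose only nonzero coefficients are the degree-0 terms looks the same from every direction, like an ordinary colored voxel; nonzero higher-degree coefficients make its color shift as the viewing angle changes.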

Q: What is NeRF and how does it compare to your Plenoxels technology for immersive photography applications?

A: A NeRF is a neural network that represents a complex 3D scene and is trained on a set of calibrated 2D images. Combined with classic volume rendering techniques, it can synthesize photorealistic views of the scene from new viewpoints. Although the quality is impressive, NeRF is computationally intensive: it can require a day to process a single scene, and it cannot render in real time, which makes it impractical for many applications. Our Plenoxels technology can process a scene in minutes, and our previous work, PlenOctrees, renders 3,000 times faster than NeRF, enabling real-time viewing experiences. Both gains came from removing neural networks.
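NeRF-style methods turn a scene representation into an image by sampling density and color at points along each camera ray and compositing them with classic volume rendering. The following is a minimal sketch of that compositing step, assuming the densities and colors have already been produced (by querying a network in NeRF, or by looking up stored values in a voxel grid). `render_ray` is a hypothetical helper name used only for illustration.

```python
import numpy as np


def render_ray(sigmas, colors, deltas):
    """Alpha-composite samples along one camera ray.

    sigmas: (N,) densities at the samples, ordered from near to far.
    colors: (N, 3) RGB colors at the samples.
    deltas: (N,) distances between consecutive samples.
    Returns the composited RGB color and the per-sample weights.
    """
    sigmas = np.asarray(sigmas, dtype=float)
    colors = np.asarray(colors, dtype=float)
    deltas = np.asarray(deltas, dtype=float)

    # Opacity contributed by each ray segment.
    alphas = 1.0 - np.exp(-sigmas * deltas)
    # Transmittance: fraction of light reaching sample i without being blocked
    # by the samples in front of it (sample 0 is fully visible).
    accum = np.cumsum(sigmas * deltas)
    trans = np.exp(-np.concatenate(([0.0], accum[:-1])))

    weights = trans * alphas
    rgb = (weights[:, None] * colors).sum(axis=0)
    return rgb, weights
```

In this sketch the compositing step does not depend on where the densities and colors come from, so the same code applies whether the samples are queried from a network or read from an explicit grid.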

Q: In addition to games and films, what are some other possible applications for your immersive photography system?

A: For consumers, I think our immersive photography system could become as casual and accessible as photographs or videos. People will be able to conveniently capture their memories in 3D or even 4D using their mobile or AR/VR devices. The immersive photography system we’re developing could be a key content provider for VR/AR displays, improve the safety of self-driving cars and the performance of robots, and be used in concert with other technologies for scientific research.

Q: If all goes well, how soon might we see some of these potential applications become commercial?

A: We’d love to hear from potential customers now!