Michael Yartsev

Michael Yartsev is an Associate Professor in the Departments of Bioengineering and Neuroscience.

Project Description

Re-Inventing the Wheel: Biologically Inspired Innovations for Autonomous Vehicles

Autonomous transportation is the way of the future. Any autonomous vehicle must be able to sense and respond to objects in its environment: both stationary objects, such as curbs and barriers, and moving ones, such as pedestrians and other vehicles. Present efforts focus on video-scene analysis, which is computationally expensive and struggles in cluttered environments. Michael Yartsev will take an alternative, biologically inspired approach to these problems by drawing on solutions that biological systems have already evolved. A major focus will be the bat, whose flight activity can be analyzed individually at the level of neural activity and collectively as interactive behavior. These findings will be translated into computational algorithms that enable optimal processing of incoming sonar-based sensory inputs and uncover the most efficient “rules of the road” for the design and operation of autonomous vehicles.

Michael Yartsev’s Story

Fruit bats aren’t the first words that come to mind when you think of driverless cars. But in their nightly forays for fruit and nectar, they routinely solve many of the engineering challenges that have stalled efforts to develop safe, reliable and efficient autonomous vehicles.

The bats’ navigation system was designed by the world’s top engineer: evolution. Michael Yartsev, Assistant Professor of Bioengineering and Neuroscience, studies the patterns of wiring and firing in the bats’ brains that nature has devised to get them from here to there in the pitch dark, without flying into obstacles or each other.

The Bakar Fellows Program supports a new effort in his lab to translate the bats’ neurological “rules of the road” into computational algorithms to guide development of navigation systems for driverless cars.

Dr. Yartsev describes the neurobiological principles his lab has uncovered and how the insights may provide a roadmap to the future.

Q. How did you decide to focus on bat echolocation as a model for engineering autonomous vehicles?

A. I began working with fruit bats for my PhD in Israel. I was interested in the neural basis of spatial representation and navigation, and echolocating bats are a wonderful system for exploring this.

They can detect objects at a very fine resolution, while flying at speeds up to 100 miles per hour. They’ve evolved superior abilities for precise sensing, perception and movement – not only as individuals, but also as part of a group.

Q. How did this fundamental neuroscience research lead you to driverless cars?

A. A few years ago, I began learning about the autonomous vehicle industry, and I realized there is a lot we could potentially contribute. But it only really got practical with the support of my Bakar Fellowship.

Q. Are these bats truly blind, as the saying goes?

A. No, the whole phrase “blind as a bat” is wrong. Our bats – Egyptian fruit bats – also have a highly developed visual system. They are pretty amazing at both echolocation and visual acuity. They use echolocation to navigate at night.

Q. How do you go about studying bats in the dark?

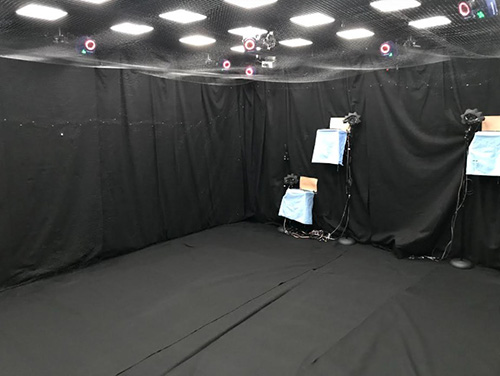

A. We’ve designed fully automated flight rooms where bats can fly freely. We study their sonar patterns using ultrasonic microphones that pick up the bats’ own transmissions: their echolocation clicks. The whole system is wireless.

Q. Getting from point A to point B is just half of the challenge for autonomous cars, isn’t it?

A. Yes, that’s right. Autonomous vehicles need to navigate with precision, but they also need to respond to traffic conditions: the proximity, speed and direction of other driverless cars. This is what we call collective behavior. Current technology has not yet solved the problem of communication between vehicles. The cars are treated as individuals navigating their environment.

To study this more complex capability, we can have bats fly together and navigate to their targets.

Q. What have you learned about the wiring in their brains that enables them to fly collectively in the dark?

A. Recent efforts that our lab has been involved in have mapped large portions of the bat’s cortex. We’ve been able to identify the precise locations of the neural signaling and perception centers for echolocation. Using wireless neurophysiological systems, we can record the neural signals from those areas.

Furthermore, when we began looking at the neural activity of bats interacting in groups, we were surprised to find a notable degree of inter-brain synchrony. This synchrony is most pronounced within a particular frequency range of their brain activity.

This presumably provides the optimal balance between signal strength and speed, allowing the bats to navigate and communicate nearly flawlessly. The same problem will need to be solved when many autonomous vehicles are on the road. They need to communicate information to one another effectively, and we currently do not know the optimal way to do so. Evolution might shed important light on this.

Q. How could this finding inform development of autonomous vehicles?

A. It may help us identify the best sonar frequency, as well as the optimal frequency band for cars to communicate with each other. For self-driving cars, you don’t want a navigation system that is 95% accurate. You need 99.99999999%. You need Ferrari-level precision, not budget-car precision.

Each additional nine beyond 99% is computationally costly to achieve. Visual sensors with that level of precision would be very expensive. But visual identification is also important. We see the two modalities as complementary.

Q. How has the autonomous vehicle industry responded to your work?

A. I’ve never worked a day in my life in industry. The Bakar Fellows Program is allowing me to have a back-and-forth with autonomous vehicle developers. That exchange can focus our research so we can really make a contribution. Without that feedback, we’d be sort of spinning our wheels.